Operating System

OS1.1 harnesses the built infrastructure to extend our sensory registers. We have collaboratively designed a lighting network linked by air. As mechanical wooden inputs are touched and turned, lighting outputs change and fluctuate. Manipulation of the physical apparatus directs sensory phenomena along new pathways.

Tools:

Electronics; Solidworks; 3D Printing; CNC

Team:

Alexia Asgari, Selin Dursun, Skye Gao, Danning Liang, Quincy Kuang, Kai Zhang

With this “Architectural Knob”, we integrated a control system into the built environment.

This “architectural knob” is located at the entrance of the Harvard Graduate School of Design building, a space with heavy foot traffic and countless serendipitous social interactions. As people pass through the space and notice this unfamiliar contraption, they begin to wonder about its purpose. Some pause to touch it, only to discover that it is a giant knob that rotates around the pillar. Each knob indirectly steers a different light source in a pattern that is not immediately obvious. A serendipitous discovery!

As people congregated around the pillar throughout the day, different behaviors began to emerge. Some people started syncing light sources together and experimenting with color blending. Others directed the lights behind strangers walking by, attempting to influence the way they moved through the space. It became a game with many unspoken rules.

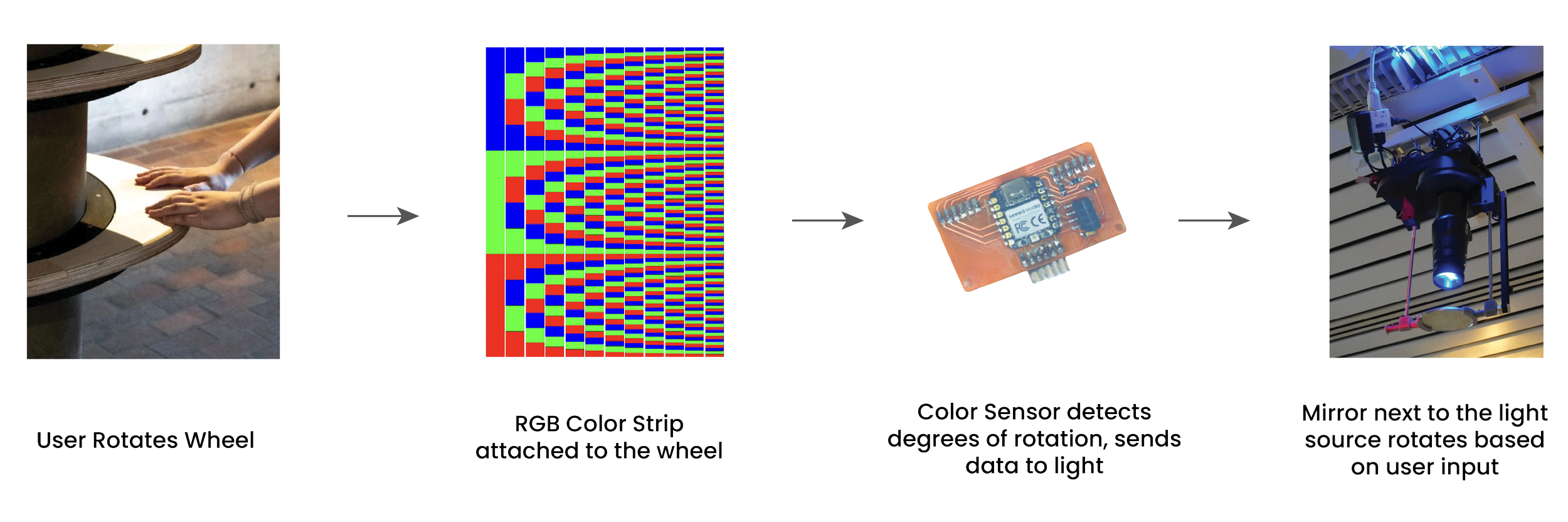

How it works

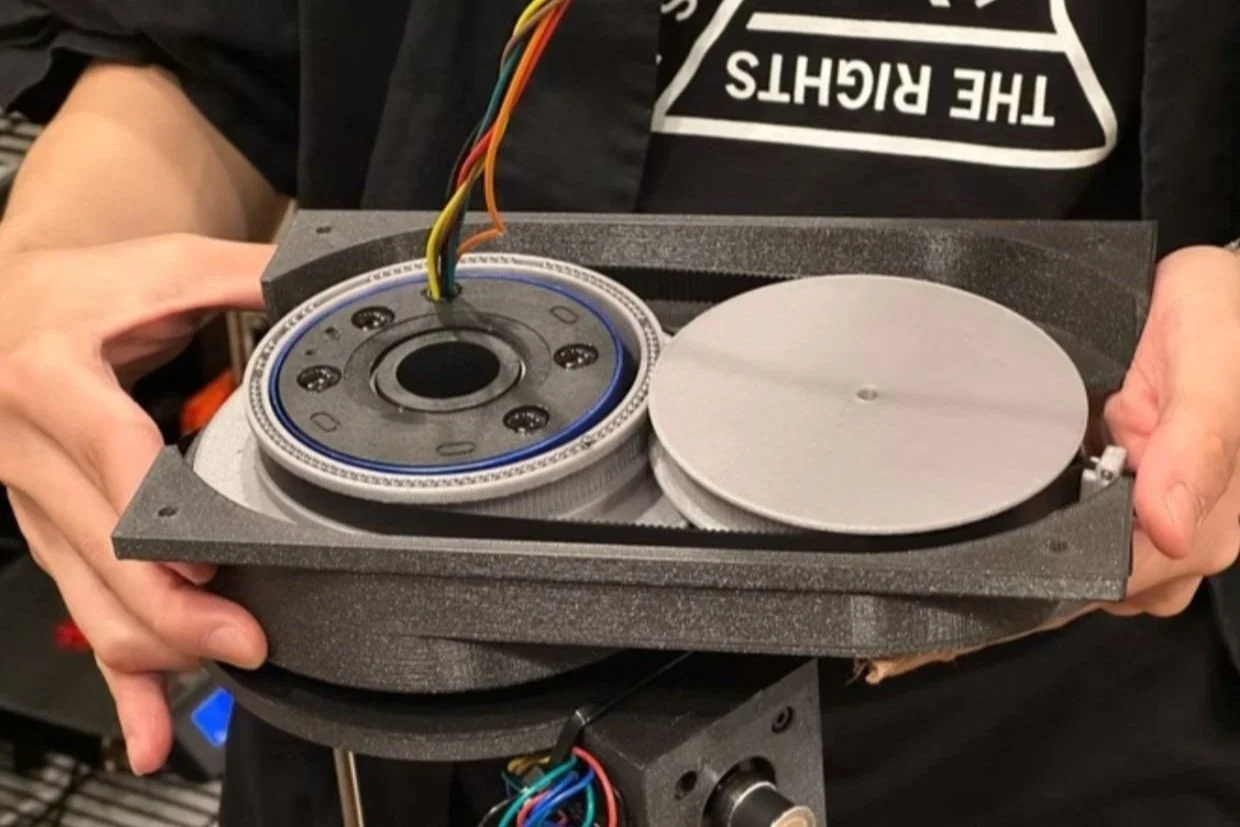

Each input module on the pillar is in effect a giant bearing that doubles as a rotary encoder. As users rotate the ring, an RGB color code integrated into it travels past a static color sensor, which detects how many degrees the ring has moved. The module then sends a signal to the light source, where the motion is mapped to the rotation of a mirror near the light, steering the beam around the space.
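The decoding step can be illustrated with a minimal sketch. It is not the installation's firmware, and several details are assumptions made for illustration: that the color code is a repeating red, green, blue stripe pattern (so the order of color changes reveals the direction of rotation), that there are 36 stripes per ring, and that the mirror turns half a degree per degree of ring rotation. The function names are hypothetical as well.

```python
# Illustrative sketch only. All constants are assumptions, not measured values.
COLORS = ("R", "G", "B")        # assumed repeating stripe order around the ring
SEGMENTS_PER_REV = 36           # assumed number of stripes on one ring
DEG_PER_SEGMENT = 360 / SEGMENTS_PER_REV
MIRROR_GAIN = 0.5               # assumed mirror degrees per degree of ring rotation


def classify(rgb):
    """Label a raw (r, g, b) sensor reading by its dominant channel."""
    return COLORS[max(range(3), key=lambda i: rgb[i])]


def step(previous, current):
    """Return +1, -1, or 0 stripes moved between two consecutive color labels.

    R -> G -> B -> R counts as forward; the reverse order counts as backward.
    """
    delta = (COLORS.index(current) - COLORS.index(previous)) % 3
    return {0: 0, 1: 1, 2: -1}[delta]


def mirror_angle(readings):
    """Accumulate ring rotation from a sequence of readings and map it to a mirror angle."""
    labels = [classify(r) for r in readings]
    segments = sum(step(a, b) for a, b in zip(labels, labels[1:]))
    ring_degrees = segments * DEG_PER_SEGMENT
    return (ring_degrees * MIRROR_GAIN) % 360


# Three forward stripe changes: 30 degrees of ring travel, 15 degrees at the mirror.
forward = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 0, 0)]
print(mirror_angle(forward))
```

Using three colors rather than two is what makes direction recoverable from a single sensor in this sketch: each color has a distinct "next" and "previous" neighbor, so one transition is enough to tell which way the ring turned.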

Experiments

Before arriving at the final design, we experimented with light positioning through mirrors and mechanical actuation. The experiments were at different scales and involved different levels of user input.

We installed these actuatable devices at the Yale motion capture lab and brought in participants to interact and “perform” with the gadgets. There were two actuation devices, one (1.1) slightly more intricate than the other (1.3). We observed how users tested the constraints of the contraptions, discovered new ways of using the devices, and came up with ways of engaging with each other and the audience.

This experiment gave us a clearer direction for building the final experience. We wanted to focus on the point of disconnect between input and output, creating moments of intrigue as users work out the cause and effect between the two. Simplicity of the input was important to us. As expressive as the gadgets we tested in the motion lab were, the experience was more about the intricacy of the mechanical actuation than about the relationship between the light and the body. Therefore, we pivoted to create something much more subtle, simple, and integrated with the site of the building.